by Sabrina Bodson, Lisa Renard, Vinciane Istace, Alina Vaduva & Kirk Chang

Artificial intelligence (AI) is an innovative and smart technology that helps managers and employees deliver better-quality service and performance. Organizations nowadays have incorporated AI into their businesses and activities, such as energy saving, marketing strategies, climate forecasting, risk prevention, customer support services, and problem solving in business operations. AI has also extended its influence into management practices, such as performance tracking, holistic-process personnel management, the development of automatable jobs, employee retention and attrition management, employee emotion management, employee rights and ethics management, and burnout prevention. In a nutshell, AI has earned its reputation in the field of people management.

The concept of the inclusive workplace has recently drawn public attention, particularly because it aims to benefit individuals, teams and the organization. In an inclusive workplace, employees feel integrated, valued and accepted in their organization, without having to conform (The British Academy of Management). Inclusive organizations support employees, regardless of their background or circumstances, to thrive at work (CIPD). When employees feel included, they feel a sense of belonging that drives better performance and creates collaborative teams that are more innovative and engaged. Employees who feel included are more likely to engage positively with their managers and colleagues, leading to higher job commitment and better performance outcomes. Despite the importance of the inclusive workplace, the reality is unfortunately far harsher, and various types of discrimination still prevail in the workplace.

Given the many merits and the potential of the inclusive workplace, we wonder whether AI could be adopted into people management policies and practices to make the workplace more inclusive, healthy and enjoyable. To our knowledge, the answer is not clear-cut, as scholars and managers tend to hold mixed views on the matter. That said, we have found some preliminary evidence that points toward possible solutions.

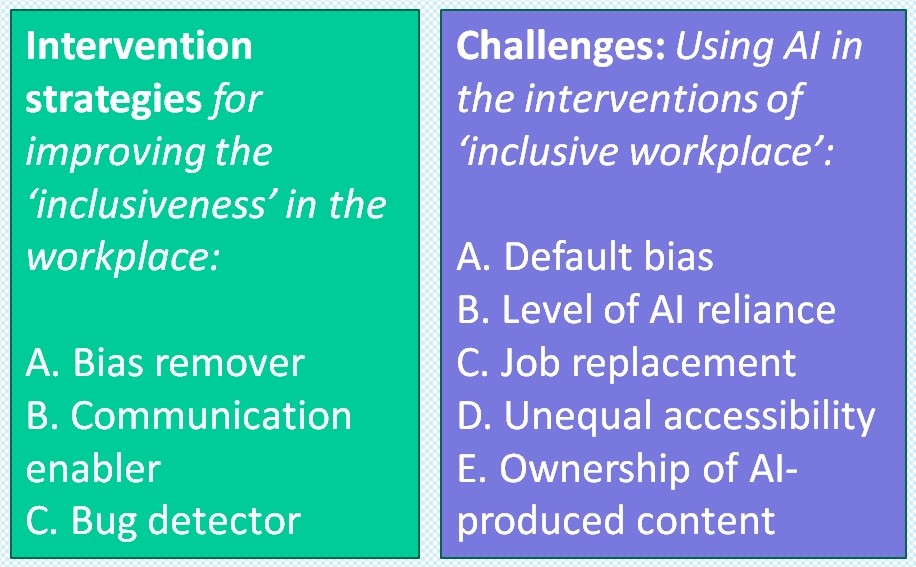

To begin with, AI could be adopted as a bias remover, mitigating unconscious bias in recruitment. AI-powered tools can help recruit people from various backgrounds by reducing the influence of human biases on the process. These tools analyze and evaluate job applicants using algorithms that can be designed to focus on specific skills, qualifications and experience rather than on factors that might be influenced by unconscious bias, such as an applicant’s name, age or appearance. By assessing candidates with data-driven methods, well-designed AI recruitment tools could create a more objective selection process and help employers identify a diverse range of talent.
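To make the "blind screening" idea concrete, here is a minimal sketch, assuming a hypothetical applicant record whose fields (`name`, `age`, `photo_url`, `skills`, `years_experience`, `qualifications`) and scoring weights are purely illustrative and not taken from any real recruitment tool. It withholds identity-related fields before scoring, so the score can depend only on skills, qualifications and experience.

```python
from dataclasses import dataclass


# Hypothetical applicant record; field names are illustrative assumptions.
@dataclass
class Applicant:
    name: str
    age: int
    photo_url: str
    skills: set[str]
    years_experience: int
    qualifications: set[str]


# Fields that the (hypothetical) blind-screening step hides from the scorer.
EXCLUDED_FIELDS = {"name", "age", "photo_url"}


def anonymize(applicant: Applicant) -> dict:
    """Return only the job-relevant attributes of an applicant."""
    return {k: v for k, v in vars(applicant).items() if k not in EXCLUDED_FIELDS}


def score(profile: dict, required_skills: set[str], required_quals: set[str],
          min_years: int) -> float:
    """Score on skills, qualifications and experience only.

    The 0.5 / 0.3 / 0.2 weights are arbitrary choices for illustration.
    """
    skill_match = len(profile["skills"] & required_skills) / max(len(required_skills), 1)
    qual_match = len(profile["qualifications"] & required_quals) / max(len(required_quals), 1)
    experience_ok = 1.0 if profile["years_experience"] >= min_years else 0.0
    return round(0.5 * skill_match + 0.3 * qual_match + 0.2 * experience_ok, 3)
```

For example, two applicants with identical skills, qualifications and experience but different names and ages receive the same score, because `score` never sees the withheld fields. Note that dropping fields is not enough on its own: remaining attributes (such as years of experience) can act as proxies for age or other characteristics, which is why the design of such tools still requires human scrutiny.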

Next, AI language models like ChatGPT can be used to improve both the efficiency and the efficacy of workplace communication. For instance, by providing real-time feedback, these models can help employees develop more inclusive communication habits, fostering a more welcoming workplace culture. AI-powered language models can also improve existing communication channels in terms of quality (e.g., fewer mistakes, more clarity), efficiency (e.g., summarizing information and disseminating the summary faster) and transparency (e.g., recording who assigned which task, where, when and how).

AI can also act as a bug detector: it can identify patterns of bias within a team or an organization. By analyzing large amounts of data, such as pay disparities, promotion rates or job satisfaction scores, AI systems can pinpoint areas where equality, diversity and inclusion (EDI) interventions are needed. This enables organizations to make data-driven decisions and address EDI issues more effectively. When used to scrutinize how information is processed, AI-powered tools can perform analyses, calculations and compilation tasks with very few errors, and their capacity for self-learning (e.g., identifying problems and correcting themselves) is a particular strength.
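As a simplified illustration of the kind of pattern detection described above, the sketch below compares median pay and promotion rates across groups and flags gaps relative to a reference group. It is plain descriptive statistics rather than a self-learning AI system, and the record layout, group labels and 5% threshold are hypothetical assumptions chosen only for illustration, not a legal or statistical standard.

```python
from collections import defaultdict
from statistics import median

# Hypothetical HR record: (group label, annual pay, promoted in last cycle?).
Record = tuple[str, float, bool]


def group_stats(records: list[Record]) -> dict[str, dict[str, float]]:
    """Compute median pay and promotion rate for each group."""
    pay = defaultdict(list)
    promoted = defaultdict(list)
    for group, salary, was_promoted in records:
        pay[group].append(salary)
        promoted[group].append(was_promoted)
    return {
        g: {
            "median_pay": median(pay[g]),
            "promotion_rate": sum(promoted[g]) / len(promoted[g]),
        }
        for g in pay
    }


def flag_gaps(stats: dict[str, dict[str, float]], reference: str,
              threshold: float = 0.05) -> list[str]:
    """Flag groups trailing the reference group by more than `threshold`
    (relative gap) on median pay or promotion rate.

    The 5% default is an arbitrary illustrative value.
    """
    ref = stats[reference]
    flags = []
    for group, s in stats.items():
        if group == reference:
            continue
        for metric in ("median_pay", "promotion_rate"):
            if ref[metric] > 0 and (ref[metric] - s[metric]) / ref[metric] > threshold:
                flags.append(f"{group}: {metric}")
    return flags
```

A flagged gap is a prompt for human review rather than proof of discrimination: differences in role, seniority or working time may explain part of it, which is exactly the kind of contextual judgement the article leaves to people.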

However, when adopting AI in the design and implementation of an inclusive workplace, one must be cautious about the potential challenges of AI applications. These include, for instance:

- Default bias: Bias inherent in AI systems, unintentionally embedded in their algorithms because of biases present in the training data, or introduced by AI designers who are unaware of their own biases.

- Level of AI reliance: To what extent is human intervention still needed or required? Who should make contextual judgements and ensure that AI-driven recommendations align with an organization’s EDI goals (e.g., policies, regulations, practices)?

- Job replacement: Could AI disproportionately affect certain parts of the workforce, such as workers performing manual and routine tasks? What will happen to those made redundant? Who bears the liability?

- Unequal accessibility: Unequal access to AI tools may create a divide among job applicants. Will applicants with and without access to AI compete for jobs on equal terms? Will this put further pressure on unemployed groups? How can fairness and ethics in recruitment be ensured?

While AI has great potential to enrich the work environment and foster employee inclusion and engagement, there are nonetheless issues and concerns about its applicability and its implications for people management. For instance, when things go wrong, who is liable: the employer, the AI user or the AI developer? Who should monitor this, and at what level? Under whose authority? What legal framework applies? Can AI itself become the victim?

Although many questions remain open, the EU took a major step towards regulating AI in June 2023, when the European Parliament adopted its position on the proposal for an “Artificial Intelligence Act” (the EU AI Act). The Act aims to ensure that AI complies with EU values and fundamental rights, and it addresses some of the aforementioned questions from a legal perspective.

The EU AI Act takes a risk-based approach, setting more or less stringent rules depending on the level of risk posed by an AI system: (i) unacceptable-risk AI systems, which pose a threat to people and are therefore banned, such as social scoring or real-time remote biometric identification systems; (ii) high-risk AI systems, which may negatively affect people’s safety or fundamental rights; (iii) generative AI, which mostly has to comply with transparency requirements; and (iv) limited-risk AI systems, such as systems that generate or manipulate image, audio or video content, whose users must be made aware that they are interacting with AI.

Employers will be particularly affected, as the use of AI in “Employment, worker management and access to self-employment” is classified as high risk and will therefore be subject to stringent rules to safeguard fundamental rights. Such AI systems will have to be registered in an EU database managed by the EU Commission and undergo a conformity assessment before being placed on the market or put into service. Furthermore, high-risk AI systems will be subject to transparency requirements designed to ensure that stakeholders can understand and use the AI system appropriately and understand what data it processes, and ultimately to allow the deployer to explain to the affected person the decisions taken using the AI system’s output. Additional requirements will include risk management, appropriate data governance and management practices (including measures to detect, prevent and mitigate possible biases), and human oversight aimed at preventing or minimizing risks to fundamental rights, especially where decisions based on automated processing by AI systems produce significant effects on the persons on whom the system is used.

As for control measures, a national supervisory authority shall be designated to supervise the implementation and application of the EU AI Act. Every natural person or group of natural persons will have the right to lodge a complaint with a national supervisory authority where they consider that an AI system relating to them infringes the regulation. Infringements could lead to penalties of up to EUR 40 million or, if the offender is a company, up to 7% of its total worldwide annual turnover, whichever is higher. Such an authority shall be completely independent and act as a point of contact for the public and other counterparts at Member State and Union levels. On top of that, other administrative or judicial remedies will remain available.

Where employers use and rely on AI, their role, responsibilities and obligations should be analyzed in light of the challenges AI raises, with the EU AI Act already in view. Employers must furthermore ensure that their use of AI complies with other applicable legal requirements, including but not limited to the provisions of the Labour Code, the principle that contracts be performed in good faith, and the provisions of the General Data Protection Regulation (the GDPR).

Furthermore, alongside the EU AI Act, the EU Commission presented its proposal for an AI Liability Directive in September 2022. The proposal aims to harmonize non-contractual civil liability rules for AI and provides, under certain circumstances and inter alia, for an obligation to disclose information on the AI systems’ compliance with the AI Act, as well as a rebuttable presumption of a causal link between the fault (consisting in a breach of the requirements set by the EU AI Act) and the damage suffered.

While this legal framework may bring some comfort in the future, the EU AI Act and the AI Liability Directive are in principle not expected to be fully adopted at EU level before 2024, with national implementation not expected before 2026. As EU Commissioner Thierry Breton rightly pointed out, however, “the challenge is to act quickly and take responsibility for exploiting all the advances while controlling the risks”. Hence, as “now is not the time to press the pause button”, Member States (and EU employers) would benefit from taking the matter into their own hands sooner rather than later. To our knowledge, no initiative has yet been taken in Luxembourg to legislate on the topic. However, starting to implement measures nationally could prove an efficient way of easing the transition and managing the already omnipresent use of AI.

Many questions will hopefully be answered by the upcoming legal framework, but promising as it sounds, some challenges and potential issues still need to be addressed. Even if AI can be used as a bug detector, it remains man-made intelligence and may therefore perpetuate past human biases if not properly designed. It is therefore up to humans, and particularly employers, to work on reducing these biases by ensuring that no discrimination of any sort is perpetuated. This implies a thorough analysis and “cleaning” of the source data used, as well as due diligence on the company’s past practices.

Other legitimate fears revolve around job replacement. Some reassurance can be found in the fact that human oversight of AI will remain required, so AI could in fact also bring new opportunities: just as data protection officers appeared with the GDPR, AI’s development will likely create new positions tailored to the need for human oversight. Employees already using AI systems, for their part, should be trained and informed on how to work with AI safely and responsibly. It is therefore crucial for employers to raise awareness and train HR staff and managers sooner rather than later.

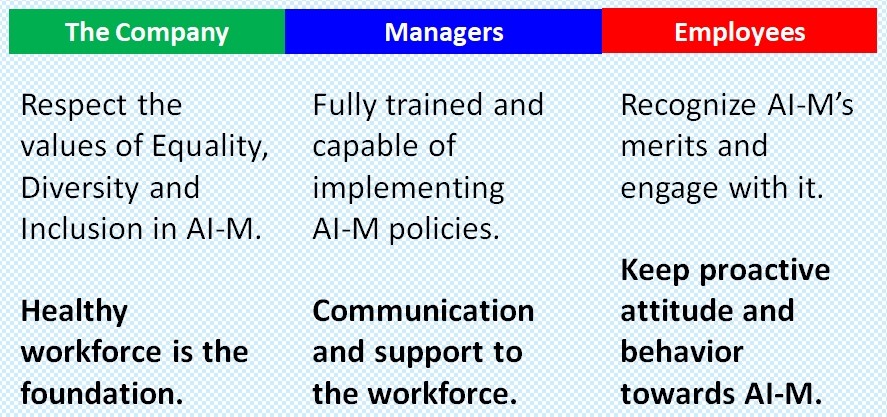

In conclusion, while stakeholders will have to come to grips with their new roles, we truly believe that, if properly conceived, AI can help create a healthy and inclusive workplace, and that employers, managers and employees will each have a different and important part to play in attaining this goal. Clear guidelines should be established to maintain a fair and inclusive environment. Ultimately, when employers, managers and employees team up and strive to make their workplace more inclusive, everyone wins!